Smart Grid Blog 5 – February 7, 2011

PSU’s third annual Smart Grid class is now about halfway through the syllabus, and there still seems to be so much to consider about this evolving technology and approach to energy delivery. The more we delve into the Smart Grid, the more we realize how vast the implications are.

After nearly a dozen classes, I am also sensing that almost too much information is being presented. At times the sheer volume of in-class information and readings is overwhelming. This intensity is made worse by a disjointed sequence of speakers, scheduled according to their availability rather than in a logical progression. That said, let’s return to the material offered by PSU’s PA 510, its exemplary course on the Smart Grid.

____________________________________________________

This 5th session of the class hosted three distinct presentations. The first was Conrad Eustis’ presentation on the regulatory trade-offs posed by Smart Grid technology. This was followed by James Mater’s presentation on the interoperability challenges inherent in the technology layers used to link the elements of the Smart Grid. Finally, Ken Nichols presented his perspective on energy trading in the western part of the continent.

_________________________________________________

– Conrad Eustis

The introduction of the Smart Grid into a regulated electricity distribution system that hasn’t changed much over the last 100 years poses some fundamental questions about investment costs, social equity issues, environmental effects and economic impacts.

The regulators are grappling with questions like:

- Should low-income electricity users be subsidized?

- Who should pay for these subsidies: ratepayers or taxpayers?

- At what cost should we pursue one policy trajectory over another?

- How expensive can we make electricity to preserve the environment?

- To what extent are regional assets accessible to the nation?

These are some of the knotty policy conundrums with which regulators are grappling. According to Conrad, decisions by electric industry regulators are mostly based on economics and have to do with cost justifications, either direct (“hard”) costs or indirect (“soft”) costs.

The delivery of electricity entails heavy investment in physical infrastructure to deliver energy at a capacity sufficient to support society’s needs. Implicit in these decisions is the trade-off between capital investment and operations and maintenance (“O&M”) costs: the more we invest in expensive capital equipment, the more we can reduce O&M costs. That’s one of the trade-offs. Others include how much we should invest to create jobs, or what investment is justified to preserve environmental health. To what degree should industrial or commercial users subsidize residential users? Should urban users help pay for rural users? And what is the acceptable cost of supporting an infrastructure that doesn’t compromise our national security?

To the extent that there are laws that require certain investments, no justifications are needed by the regulators. But there remains much “art” in how the social and environmental costs are included in regulators’ calculations. According to Conrad, many policy decisions are influenced by emotion or the “Movement Du Jour”.

But fundamentally regulators’ decisions are based on serving the public interest at a fair and reasonable price. In many cases the dynamic of a free market is considered to be the more efficient way to allocate resources, but in a regulated industry we need to ask whether there isn’t a cheaper way to achieve the desired end. That’s the whole point of having a regulated industry in the first place.

In general, regulators are concerned with managing the costs of delivering electricity from a financial perspective, from a social perspective and, increasingly, from an environmental perspective as well. Their mandates are usually circumscribed by the concept of delivering electricity at a fair and reasonable price, supported by prudent investments.

Cost justifications can be successfully argued if it can be shown that the alternative energy source is less expensive than the conventional source, that the capacity is cheaper, or that the O&M costs are lower. Indirect cost arguments might claim that an expense was justified by the job creation the investment triggered. Increasingly, environmental costs are also included, if they have not already been codified into environmental regulations that must be heeded. But as suggested above, the indirect costs of social and environmental impacts can often be influenced by emotional appeals and popular concerns.

But here, Conrad introduces an interesting caveat. Suppose you built a super energy-efficient house with hyper-effective solar panels and ultra-efficient appliances. And suppose further, with the help of an overly optimistic nature, that this house achieved and even exceeded net zero energy status, resulting in a net “export” of energy to the grid. Would the utility have to consider this energy source cheaper than conventional generation? The answer is no, because of the cross-subsidies that understate the real costs of providing retail electricity. Why is this so? Because utilities usually prefer to book some fixed costs (poles and wires) as variable costs in order to artificially lower retail electricity rates for residential users. Thus, when we add these variable distribution costs to the exported power (from your “net-negative” home), the cost is much higher than the utility’s cost of generation.
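The caveat comes down to simple arithmetic. The sketch below assumes made-up round numbers (not PGE figures) for the generation cost and for the fixed distribution costs recovered per kWh, and compares a retail-rate credit for exported energy with what that energy costs the utility to generate.

```python
# Hypothetical illustration of the net-metering caveat: the retail rate a
# home is credited at includes fixed "poles and wires" costs recovered per
# kWh, so it exceeds what the exported energy costs the utility to generate.
# All rates are made-up round numbers, not PGE figures.

GENERATION_COST = 0.05      # $/kWh, hypothetical utility cost of generation
DISTRIBUTION_ADDER = 0.04   # $/kWh, hypothetical fixed costs booked as variable
RETAIL_RATE = GENERATION_COST + DISTRIBUTION_ADDER  # $/kWh residential rate


def export_credit_gap(exported_kwh: float) -> float:
    """Dollars by which a retail-rate credit exceeds the utility's
    generation cost for the same exported energy."""
    credit_at_retail = exported_kwh * RETAIL_RATE
    cost_of_generation = exported_kwh * GENERATION_COST
    return round(credit_at_retail - cost_of_generation, 2)


# A "net-negative" home exporting 1,000 kWh in a year
print(export_credit_gap(1000))  # → 40.0
```

Under these assumptions the gap is exactly the distribution adder times the exported energy, which is the cross-subsidy Conrad described.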

Fundamental to this discussion of the relative costs of power generation is a definition of the efficiency of energy generation. The second law of thermodynamics established the concept of entropy, which explains why energy conversion always results in the loss of some of that energy. Lord Kelvin stated it as follows: “No process is possible in which the sole result is the absorption of heat from a reservoir and its complete conversion into work.” In other words, converting heat into work (and ultimately into electric power) always involves some loss. The fraction of the input energy that is delivered as useful output is the efficiency of the conversion process.

Most conventional generation plants (renewable energy sources excepted) convert heat into electricity. The efficiency of this process is usually expressed as the heat rate, which measures the heat (in Btu) required to generate 1 kWh; the lower the heat rate, the higher the efficiency.

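$$\text{Heat Rate} = \frac{\text{Btu of heat input}}{\text{1 kWh of electricity generated}}, \qquad \eta = \frac{3{,}412\ \text{Btu/kWh}}{\text{Heat Rate}}$$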

The heat rate gives us a convenient way to compare the efficiency of various ways of converting energy into power. Conrad then proceeded to give a whole list of comparable conversion processes to illustrate their relative efficiency.

- Combined Cycle Combustion Turbine: Heat Rate ~7,000 Btu/kWh; η = 49%

- New Coal Plant: Heat Rate 9,800 Btu/kWh; η = 35%

- Low Speed Diesel on Liquid Fuel: Heat Rate 8,540 Btu/kWh; η = 40%

- Nuclear Plant: Heat Rate 11,000 Btu/kWh; η = 31%

- Simple Combustion Turbine: Heat Rate 11,400 Btu/kWh; η = 30%

- High Speed Diesel: Heat Rate 12,200 Btu/kWh; η = 28%

- Automotive Diesel: Heat Rate 14,200 Btu/kWh; η = 24%

- Typical 4-Cylinder Engine: Heat Rate 18,000 Btu/kWh; η = 19%

- PV Panel: η = 6% to 35% of the energy in incident sunlight
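The efficiencies in this list follow directly from the heat rates, because 1 kWh of electricity is equivalent to about 3,412 Btu. A minimal sketch of that conversion, using the heat rates quoted in class:

```python
# Convert a heat rate (Btu of fuel heat per kWh of electricity) into a
# thermal efficiency. 1 kWh of electricity is equivalent to about 3,412 Btu.
BTU_PER_KWH = 3412.14


def efficiency_from_heat_rate(heat_rate_btu_per_kwh: float) -> float:
    """Return thermal efficiency as a fraction between 0 and 1."""
    if heat_rate_btu_per_kwh <= 0:
        raise ValueError("heat rate must be positive")
    return BTU_PER_KWH / heat_rate_btu_per_kwh


# Heat rates from Conrad's list (Btu/kWh)
HEAT_RATES = {
    "Combined cycle CT": 7000,
    "New coal plant": 9800,
    "Low speed diesel": 8540,
    "Nuclear plant": 11000,
    "Simple cycle CT": 11400,
    "High speed diesel": 12200,
    "Automotive diesel": 14200,
    "4 cylinder engine": 18000,
}

for name, hr in HEAT_RATES.items():
    print(f"{name:20s} {hr:6d} Btu/kWh  eta = {efficiency_from_heat_rate(hr):.0%}")
```

Each printed efficiency rounds to the value in the list above, which is a quick consistency check on the slide figures.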

Knowing the energy conversion efficiency is not enough to evaluate the practical cost of operating a plant. To do this we need to look at levelized plant operating costs, which take into account the cost of the fuel and the maintenance costs for the plant, as well as the time during which the plant will actually be in operation.

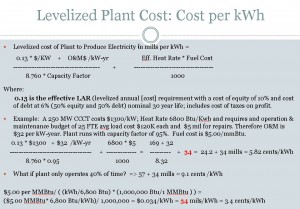

First we determine the plant’s levelized annual requirement (“LAR”), the share of the plant’s capital cost that must be recovered each year. We multiply the LAR by the cost of the plant per kW of capacity (its “overnight installation cost”) to get an annual cost per kW. This annual cost is then divided by the plant’s utilization: 8,760 hours times the capacity factor, divided by 1,000 so that the result comes out in mills (tenths of a cent). The result is the capital component of the cost in mills per kWh, adjusted to reflect the percentage of capacity at which the plant is actually utilized. It represents the cost of building, financing and operating the plant, spread over each kWh the plant actually produces.


To complete the cost calculation we add the fuel component: the plant’s heat rate multiplied by the fuel cost, also expressed in mills per kWh. The sum of the capital component (construction and financing) and the fuel component at prevailing fuel prices gives the levelized plant operating cost.

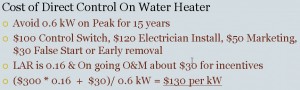
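The levelized-cost steps can be sketched in a few lines. This is a minimal illustration, not Conrad's spreadsheet: it assumes the LAR is an annual fraction of capital cost and that fuel is priced in $/MMBtu, and every input in the example is hypothetical.

```python
# Minimal levelized-cost sketch following the steps described above.
# Assumptions (not from the slides): LAR is an annual fraction of the
# plant's capital cost, fuel is priced in $/MMBtu, and all inputs in the
# example are hypothetical.

HOURS_PER_YEAR = 8760
MILLS_PER_DOLLAR = 1000  # 1 mill = 1/10 of a cent = $0.001


def capital_component_mills(lar: float, capital_cost_per_kw: float,
                            capacity_factor: float) -> float:
    """Annual capital requirement per kW, spread over the kWh actually produced."""
    if not 0 < capacity_factor <= 1:
        raise ValueError("capacity_factor must be in (0, 1]")
    annual_cost_per_kw = lar * capital_cost_per_kw       # $/kW-year
    kwh_per_kw_year = HOURS_PER_YEAR * capacity_factor   # kWh per kW-year
    return annual_cost_per_kw / kwh_per_kw_year * MILLS_PER_DOLLAR


def fuel_component_mills(heat_rate_btu_per_kwh: float,
                         fuel_price_per_mmbtu: float) -> float:
    """Heat rate times fuel price, converted to mills per kWh."""
    dollars_per_kwh = heat_rate_btu_per_kwh / 1_000_000 * fuel_price_per_mmbtu
    return dollars_per_kwh * MILLS_PER_DOLLAR


def levelized_cost_mills(lar: float, capital_cost_per_kw: float,
                         capacity_factor: float, heat_rate_btu_per_kwh: float,
                         fuel_price_per_mmbtu: float) -> float:
    """Capital component plus fuel component, in mills per kWh."""
    return (capital_component_mills(lar, capital_cost_per_kw, capacity_factor)
            + fuel_component_mills(heat_rate_btu_per_kwh, fuel_price_per_mmbtu))


# Hypothetical combined cycle plant: 12% LAR, $1,000/kW, 50% capacity factor,
# 7,000 Btu/kWh heat rate, $5/MMBtu gas
print(f"{levelized_cost_mills(0.12, 1000, 0.5, 7000, 5.0):.1f} mills/kWh")
```

With these made-up inputs the sketch prints 62.4 mills/kWh (about 6.2 cents), of which 35 mills is fuel.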

Conrad then presented several other examples of levelized costs for various projects, such as a wind farm (8.5 cents/kWh), a 3 kW home PV installation (9.5 cents/kWh) and a D cell battery ($16/kWh). The simple cycle combustion turbine is the lowest-cost plant that PGE can build. This is an important example: if we use this plant to serve peak load for only 87 hours per year, it is still the cheapest resource PGE can build, yet at that point it costs $1.87 per kWh. More important, though, is the fixed cost per kW of capacity. This is the “bogey” for the demand response (DR) program. If you’re trying to beat the cost of building a simple cycle CT plant, the cost of energy is no longer the relevant measure; what’s relevant is the cost to displace one kW of existing capacity.

He also provided the calculation for a DR program.
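The DR comparison can be sketched as a cost-per-kW test. This assumes the $1.87/kWh at 87 hours per year quoted in class is almost entirely fixed cost, so multiplying the two gives an approximate annual cost per kW of CT capacity (it slightly overstates the fixed cost because it includes fuel). The DR program figures are hypothetical.

```python
# Sketch of the demand-response "bogey": a DR program is worth pursuing if it
# displaces a kW of peak capacity for less than the annual fixed cost of a
# simple cycle CT. The CT figure is back-calculated from the $1.87/kWh at
# 87 hours/year quoted in class (an approximation that includes fuel).

CT_COST_PER_KWH_AT_PEAK = 1.87   # $/kWh when run 87 hours/year
PEAK_HOURS_PER_YEAR = 87


def ct_fixed_cost_per_kw_year(cost_per_kwh: float, hours: float) -> float:
    """Approximate annual cost per kW implied by a per-kWh peaking cost."""
    return cost_per_kwh * hours


def dr_is_cost_effective(program_cost_per_year: float, kw_displaced: float,
                         bogey_per_kw_year: float) -> bool:
    """Compare the DR program's annual cost per kW displaced with the CT bogey."""
    if kw_displaced <= 0:
        raise ValueError("kw_displaced must be positive")
    return program_cost_per_year / kw_displaced < bogey_per_kw_year


bogey = ct_fixed_cost_per_kw_year(CT_COST_PER_KWH_AT_PEAK, PEAK_HOURS_PER_YEAR)
print(f"CT bogey: about ${bogey:.0f} per kW-year")
# Hypothetical program: $500,000/year to shed 5,000 kW of peak load
print(dr_is_cost_effective(500_000, 5_000, bogey))
```

The point of the design is that energy prices never appear: only dollars per kW of displaced capacity are compared.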

One of the important points in this discussion was that the variable cost (fuel) calculation makes a dramatic difference in setting the cost. Most capital-intensive plants are very sensitive to part-time operation: even though the fixed costs stay the same, reduced operating time materially increases the levelized cost. This is a challenge for independent power producers, which is why they rely on fixed contracts; otherwise the risk is far too high. For peaking plants that may run only about 1% of the time, the cost per kWh rises sharply, because most of the time the plant sits idle, yet the financing and construction costs must still be borne.
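The sensitivity to hours of operation is easy to see numerically. The sketch below spreads a hypothetical fixed cost of $160 per kW-year over different operating hours and adds fuel at the simple cycle CT heat rate and an assumed $5/MMBtu gas price.

```python
# Sketch: how the cost per kWh of a capital-intensive plant rises as its hours
# of operation fall. The fixed cost and gas price are hypothetical; the heat
# rate is the simple cycle CT figure from the list above.

FIXED_COST_PER_KW_YEAR = 160.0             # $/kW-year (hypothetical)
FUEL_COST_PER_KWH = 11400 / 1e6 * 5.0      # 11,400 Btu/kWh at $5/MMBtu


def cost_per_kwh(hours_per_year: float) -> float:
    """Fixed cost spread over the hours run, plus fuel for each kWh."""
    if hours_per_year <= 0:
        raise ValueError("hours_per_year must be positive")
    return FIXED_COST_PER_KW_YEAR / hours_per_year + FUEL_COST_PER_KWH


for hours in (8760, 4380, 876, 87):
    share = hours / 8760
    print(f"{hours:5d} h/yr ({share:6.1%}): ${cost_per_kwh(hours):.3f}/kWh")
```

Running around the clock, fuel dominates; at about 1% of the year, the fixed cost term dominates and the per-kWh cost rises more than twentyfold.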

With respect to PV panels, conversion efficiency matters because roof space is limited. Efficiency also makes a huge difference in the amount of land you have to purchase for larger installations, and a physically larger installation requires more wiring, which is a significant cost.
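A quick way to see why efficiency drives roof and land area: at the standard test condition irradiance of 1,000 W/m², module area scales inversely with efficiency. This sketch ignores row spacing, inverters and wiring layout.

```python
# Sketch: module area needed for a given PV nameplate rating at different
# efficiencies. Assumes the standard test condition irradiance of
# 1,000 W/m^2 and ignores spacing between rows.

STC_IRRADIANCE_KW_PER_M2 = 1.0


def array_area_m2(nameplate_kw: float, efficiency: float) -> float:
    """Module area needed to reach nameplate_kw at the given efficiency."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be a fraction between 0 and 1")
    return nameplate_kw / (STC_IRRADIANCE_KW_PER_M2 * efficiency)


for eta in (0.06, 0.15, 0.20):
    print(f"3 kW at {eta:.0%}: {array_area_m2(3, eta):.0f} m^2")
```

For a 3 kW home system, this prints 50, 20 and 15 m² respectively.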

In response to a question about German subsidies for PV installations, which eventually became very prevalent, Conrad explained how the German subsidies, as high as 40 cents per kWh (versus a 10-cent base rate), were effectively passed on to all base-rate customers. That meant a substantial subsidy had to be shared among all the other users. As installations multiplied, the growing subsidy burden was pushed onto a shrinking group, and that eventually forced a reduction in the subsidies.
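The squeeze Conrad described can be sketched as a simple surcharge calculation. It uses the 40 vs 10 cents/kWh figures from class; the energy volumes are hypothetical, and the assumption that PV owners' own consumption does not carry the surcharge is a simplification that mirrors the "smaller group" description rather than the actual German rules.

```python
# Sketch of how a feed-in tariff premium is spread across other customers.
# Tariff and base rate echo the figures from class (40 vs 10 cents/kWh);
# energy volumes are hypothetical.

FEED_IN_TARIFF = 0.40   # per kWh paid to PV owners
BASE_RATE = 0.10        # per kWh value of conventional supply


def surcharge_per_kwh(pv_kwh: float, total_kwh: float) -> float:
    """Premium paid on PV output, spread over kWh bought by everyone else.

    Simplifying assumption: PV owners' consumption is excluded, so the
    paying base shrinks as PV output grows.
    """
    paying_kwh = total_kwh - pv_kwh
    if paying_kwh <= 0:
        raise ValueError("no paying customers left to carry the subsidy")
    premium = (FEED_IN_TARIFF - BASE_RATE) * pv_kwh
    return premium / paying_kwh


TOTAL_KWH = 1_000_000
for pv_share in (0.01, 0.05, 0.10, 0.20):
    s = surcharge_per_kwh(pv_share * TOTAL_KWH, TOTAL_KWH)
    print(f"PV share {pv_share:4.0%}: surcharge {s * 100:.2f} cents/kWh")
```

The surcharge grows faster than the PV share because both the numerator (premium) and the shrinking denominator (paying base) work in the same direction.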

__________________________________________

– James Mater

James Mater is one of the founders of Smart Grid Oregon, one of the nation’s first industry associations focused on promoting the advancement of Smart Grid technologies and of the companies that produce goods and services supporting the successful implementation of the Smart Grid. He is also co-founder, Director and Smart Grid Evangelist of QualityLogic, a firm developing test and certification tools for the technology market, which is currently expanding into verification of Smart Grid interoperability requirements.

James is also part of the teaching staff for this seminal Smart Grid course. This was his first contribution to the course.

His objective with this initial presentation was to help the students gain an appreciation for the challenges of achieving “plug and play” interoperability between smart grid components and applications. With the lecture he planned to identify the key organizations working on smart grid standards and the status of current efforts to achieve a national consensus on those standards.

He started his presentation by quoting George Arnold’s testimony to Congress this past July.

In essence, George Arnold was pointing out that efforts to achieve any sort of standardization are encumbered by what are effectively 3,100 “silos,” each intent on its own home-grown solutions. Achieving any standardization would be a huge task, he warned. He ought to know: George Arnold came out of the telecom business, where he helped to develop the WiMAX standard. Yet the telecommunications industry has only 3 standards organizations, while the Smart Grid has 15.

NIST echoed this concern in its January 2010 Framework and Roadmap for Smart Grid Interoperability Standards, declaring that the “lack of standards may also impede future innovation and the realization of promising applications.”

Yet the opportunity was enormous. In the same report, NIST forecast that the “U.S. market for Smart Grid-related equipment, devices, information and communication technologies, and other hardware, software, and services will double between 2009 and 2014—to nearly $43 billion…the global market is projected to grow to more than $171 billion, an increase of almost 150 percent.”

In an observation that may seem out of place in the highly regulated electricity market, NIST went so far as to declare that “standards enable economies of scale and scope that help to create competitive markets in which vendors compete on the basis of a combination of price and quality.”

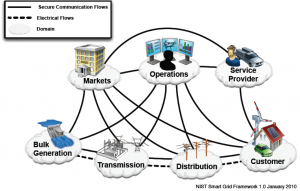

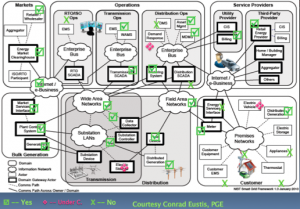

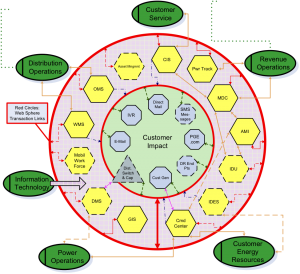

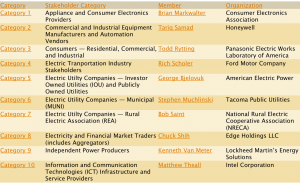

James Mater then reviewed the NIST conceptual model. In the terminology being used for Smart Grid discussions, each of these seven “cloud” categories is called a “domain.” Within any particular domain, there may be a number of different “stakeholders”. The framework being used by NIST to coordinate this effort identifies 22 stakeholder groups, from “appliance and consumer electronics providers” and “municipal electric utility companies” to “standards-development organizations” and “state and local regulators.”

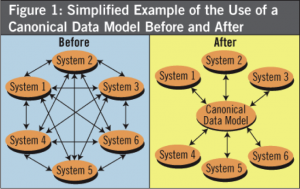

He then considered how that model looks when overlaid on the existing structure of a utility that has already moved toward some degree of proprietary automation.

These slides, already presented by Conrad Eustis, showed the difficulty of trying to achieve economies of scale on an incremental basis. Interoperability was achieved, but in a proprietary manner that denied the benefits of economies of scale and innovation that accrue from open standards and competitive markets. Moreover, the challenge is even more complex when we consider the whole panoply of systems used by a major utility.


Each of these systems, whether management software or operational tools, is supported by a proprietary enterprise service bus that communicates bilaterally, but not universally. This is the challenge facing the designers of interoperability standards, who have to connect many stand-alone systems.

This is the task that has been assigned to NIST – mapping the standards for Smart Grid.
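The scaling problem behind bilateral interfaces can be sketched with a simple count. This assumes one interface per pair of systems (ignoring direction) for point-to-point integration, versus one adapter per system when every system maps to a shared standard.

```python
# Why bilateral (point-to-point) integration scales badly: count the
# interfaces needed when every system talks directly to every other system,
# versus when each system maps once to a shared standard.


def point_to_point_interfaces(n_systems: int) -> int:
    """Each pair of systems needs its own bilateral interface."""
    return n_systems * (n_systems - 1) // 2


def shared_standard_interfaces(n_systems: int) -> int:
    """Each system needs one adapter to the common standard."""
    return n_systems


for n in (5, 10, 20, 50):
    print(n, point_to_point_interfaces(n), shared_standard_interfaces(n))
```

Fifty systems need 1,225 bilateral interfaces but only 50 adapters to a common standard, which is the economic case for open interoperability standards.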

Who is active in developing the Smart Grid standards?

NIST has published 25 of the most important standards. OpenADR, which facilitates demand response (DR) in commercial buildings, has just been published as a standard. NIST is now identifying the “critical” standards in every domain. So far about 33 standards enjoy a degree of consensus, and another 70 are under consideration. The security standards are the last to be promulgated, in part because they are a foil for the naysayers. And to be certain, security for the Smart Grid is a serious challenge that needs a solution at least as reliable as what we expect when flying or banking.

So who are the key players involved in developing the Smart Grid standards?

- NIST

- Smart Grid Interoperability Panel

- GridWise Architecture Council (GWAC)

- UCA International

- GridWise Alliance

- EPRI/EEI

- ZigBee, Wi-Fi – low-power radio inside buildings

- IEEE PES/2030 – Institute of Electrical and Electronics Engineers

- SDOs (standards development organizations)

- ISO – international standards; technology standards

- IEC – Geneva-based; active in generation & transmission

- ANSI – working on meters

- NAESB – industry group

- NRECA

- State Legislatures

- Federal/State Regulators

- FERC

- NERC

- State PUCs

- International standards bodies

- OASIS – good at internet standards

- ASHRAE – heating, ventilation & air conditioning (HVAC)

- BACnet – standard for commercial buildings

- OPC

The most active of these groups include the following:

The GridWise Architecture Council:

The GridWise Architecture Council (GWAC) is DOE-sponsored and has 13 council members from different parts of the domain. Under the Energy Independence and Security Act (EISA) of 2007, the National Institute of Standards and Technology (NIST) has “primary responsibility to coordinate development of a framework that includes protocols and model standards for information management to achieve interoperability of smart grid devices and systems…” EISA requires that NIST consult with GWAC to define the standards and set up investment grants. The GWAC also sponsors 3 annual conferences:

- ConnectivityWeek

- GridWeek

- Grid-Interop

The GridWise Architecture Council has enormous influence. It is developing the Interoperability Context-Setting Framework and designed the GWAC stack, which is adapted from the highly successful layered OSI model that helped to stimulate innovation in the computer industry.

To carry out its EISA-assigned responsibilities, NIST devised a three-phase plan to rapidly establish an initial set of standards. In April 2009, NIST launched the plan to expedite development and promote widespread adoption of Smart Grid interoperability standards:

- Engage stakeholders in a participatory public process to identify applicable standards, gaps in currently available standards, and priorities for new standardization activities.

- Establish a formal private-public partnership to drive longer-term progress.

- Develop and implement a framework for testing and certification.

Smart Grid Interoperability Panel (SGIP):

The Smart Grid Interoperability Panel (SGIP) is the way NIST interacts with industry. Some of the SGIP players came from GWAC. They are working on Smart Grid standards, developing priority action plans, and designing the testing and certification standards. SGIP developed the Smart Grid conceptual model (see the earlier graphics with the various domains shown as clouds) and is working on Smart Grid cyber security solutions. It is also working on the interoperability knowledge base (IKB). Importantly, it also runs the SGIP TWiki for technical collaboration. This wiki is an open collaboration site for the Smart Grid (SG) community to work with NIST in developing this framework. The board of the SGIP is chosen from across industry and government.

SG Architecture Committee: Semantic Model work

A canonical data model (CDM) is a semantic model chosen as a unifying model that will govern a collection of data specifications.
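The idea of a canonical data model can be sketched as "map every vendor format once into one shared shape." The record layouts and field names below are entirely hypothetical; real Smart Grid semantic work (such as the IEC Common Information Model) is far richer than this toy.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class MeterReading:
    """Hypothetical canonical representation shared by all systems."""
    meter_id: str
    timestamp: datetime  # always stored in UTC
    energy_kwh: float


def from_vendor_a(record: dict) -> MeterReading:
    # Hypothetical vendor A: watt-hours and a Unix epoch timestamp
    return MeterReading(
        meter_id=record["id"],
        timestamp=datetime.fromtimestamp(record["epoch"], tz=timezone.utc),
        energy_kwh=record["wh"] / 1000.0,
    )


def from_vendor_b(record: dict) -> MeterReading:
    # Hypothetical vendor B: kWh as text and an ISO 8601 time with UTC offset
    return MeterReading(
        meter_id=record["MeterNo"],
        timestamp=datetime.fromisoformat(record["ReadTime"]).astimezone(timezone.utc),
        energy_kwh=float(record["kWh"]),
    )


a = from_vendor_a({"id": "M-001", "epoch": 1297036800, "wh": 12500})
b = from_vendor_b({"MeterNo": "M-001", "ReadTime": "2011-02-06T16:00:00-08:00", "kWh": "12.5"})
print(a == b)  # → True
```

Both vendor records describe the same reading (midnight UTC on February 7, 2011), and once translated into the canonical model they compare equal, so downstream systems only need to understand one format.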

NERC:

NERC Critical Infrastructure Protection (CIP) standards require compliance every 6 months, with penalties of up to $1 million per violation per day. Industry was shell-shocked by these requirements and is very anxious about any standards that might conflict with CIP. In fact, CIP is a whole family of standards that essentially require you to document everything you do. These CIP standards were originally devised and implemented to prevent big blackouts, so they are both rigorous and heavily enforced.

__________________________________________

Smart Power Pricing, Space and Time


– Ken Nichols

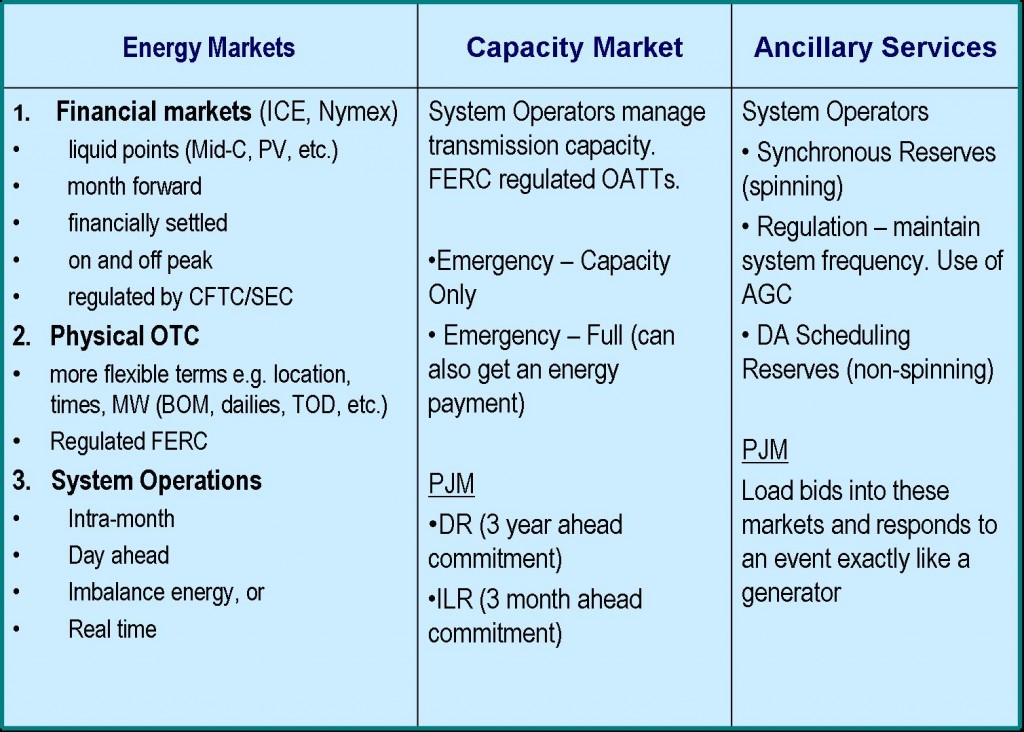

Electricity is the most volatile commodity traded in futures markets, because it can’t be stored. Futures prices are based on quantity, location and time. This mirrors futures contracts in other commodities such as orange juice, for which a typical futures contract might specify:

Quantity: 15,000 lb of Frozen Concentrate OJ

Location: exchange-certified warehouses in California and Florida

Time: 1st business day of the month

For natural gas, the primary delivery point is known as the “Henry Hub,” located in Louisiana. Other locations can be specified, but the price of natural gas delivered elsewhere will vary because of transportation constraints and costs. This price difference is called the “basis.”
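Basis is just a price difference against the Henry Hub benchmark. A minimal sketch with hypothetical prices in $/MMBtu:

```python
# Basis sketch: the difference between the price at a local delivery point
# and the Henry Hub benchmark. Prices are hypothetical, in $/MMBtu.


def basis(local_price: float, henry_hub_price: float) -> float:
    """Positive basis: local gas costs more than at Henry Hub."""
    return local_price - henry_hub_price


def local_price_from_basis(henry_hub_price: float, basis_value: float) -> float:
    """Recover a local price from a Henry Hub quote plus a basis quote."""
    return henry_hub_price + basis_value


print(basis(4.75, 4.25))                   # → 0.5
print(local_price_from_basis(4.25, -0.3))  # a location trading below Henry Hub
```

Quoting basis separately lets a buyer hedge the benchmark price and the location difference independently.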

There are apparently two variants of energy futures trading: financial instruments, referred to as “Space Contracts,” and “Physical Contracts.” Physical contracts are traded bilaterally and are more likely to result in delivery. Some “Space Contracts” also offer a conversion price that allows buyers to secure a notional amount of energy and later convert the contract into a physical contract for actual delivery if the energy is ultimately needed.

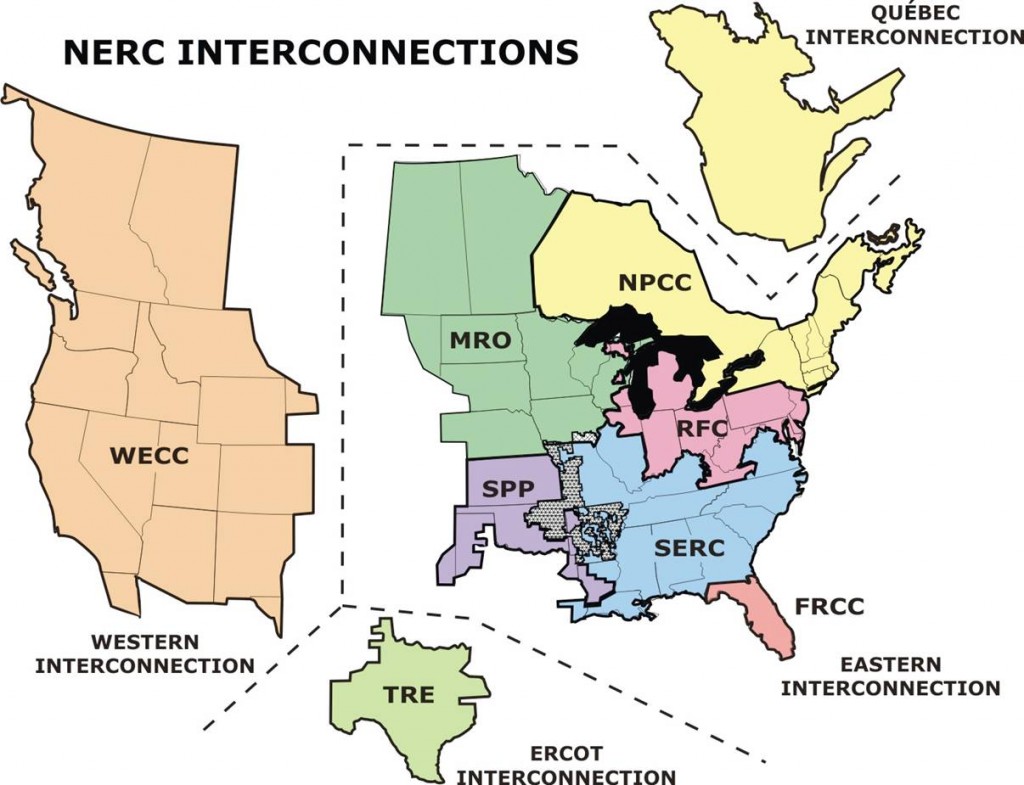

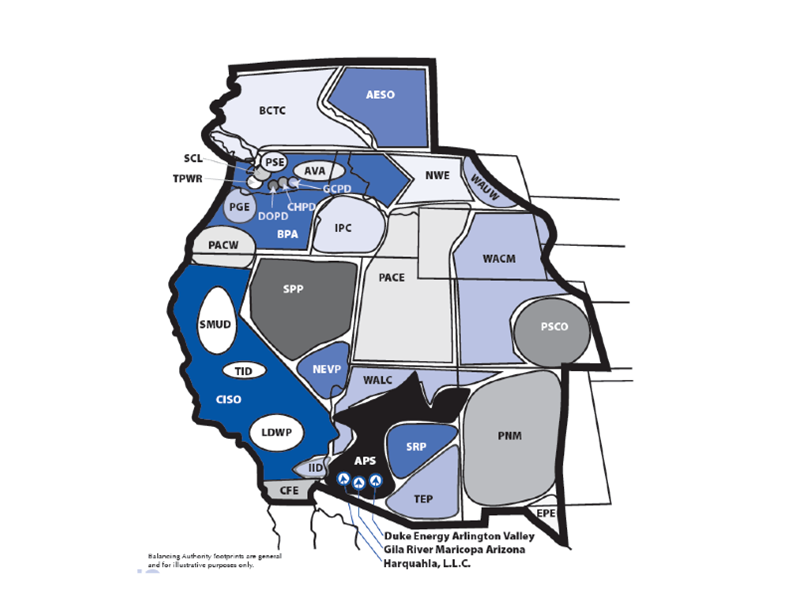

The NERC interconnections:

The North American Electric Reliability Corporation’s (NERC) mission is to ensure the reliability of the North American bulk power system. NERC is the electric reliability organization certified by the Federal Energy Regulatory Commission to establish and enforce reliability standards for the bulk-power system, including the large regional electric transmission networks that span North America.

NERC sets rules for regional system operation and reliability, such as reserve margin requirements and transmission scheduling.

The three interconnections that span North America (the Eastern Interconnection, the Western Interconnection and ERCOT) are each synchronous AC systems, linked to one another only by small DC ties. In particular, Tres Amigas, a project proposed in eastern New Mexico near the Texas panhandle, would provide limited connectivity among ERCOT, the Western Electricity Coordinating Council (WECC) and the Southwest Power Pool (SPP), which is part of the Eastern Interconnection. The following are the NERC regional entities:

- Florida Reliability Coordinating Council (FRCC)

- Midwest Reliability Organization (MRO)

- Northeast Power Coordinating Council (NPCC)

- ReliabilityFirst Corporation (RFC)

- SERC Reliability Corporation (SERC)

- Southwest Power Pool, RE (SPP)

- Texas Reliability Entity (TRE)

- Western Electricity Coordinating Council (WECC)

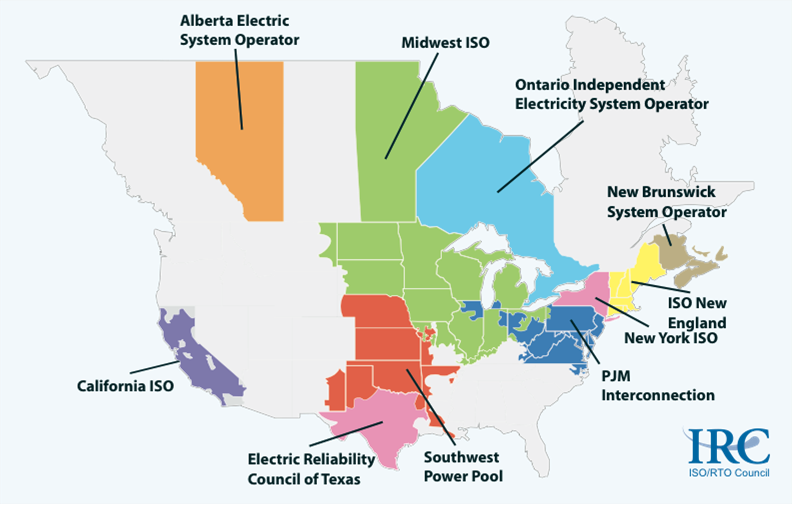

These players can be further distinguished as Independent System Operators (ISOs) and Regional Transmission Organizations (RTOs). Both types of organization are interested in improving the quality of the information supporting market transactions and in the effective consolidation of operations by the multiple owners of transmission. By driving down the physical barriers between the various regional electricity pools, NERC is encouraging increased integration of a national electricity market. For that reason, this integration has not been fully embraced by Pacific Northwest energy providers, who enjoy a substantial energy price advantage as a result of the region’s hydroelectric potential.


RTOs:

- Midwest Independent Transmission System Operator (MISO), an RTO despite the ISO in its name

- ISO New England Inc. (ISO-NE), an RTO despite the ISO in its name

- PJM Interconnection LLC (PJM)

Non-RTO transmission organizations:

ISO’s

- Alberta Electric System Operator (AESO)

- California ISO (CAISO)

- Electric Reliability Council of Texas (ERCOT)

- Independent Electricity System Operator (IESO), operates the Hydro One transmission grid for Ontario, Canada

- New York Independent System Operator (NYISO)

Ken Nichols discussed the formation of the RTOs and ISOs. He explained that “the PJM, New England and New York ISO’s were established on the platform of existing tight power pools. It appears that the principal motivation for creating ISO’s in these situations was [FERC] Order No. 888 that required that there be a single systemwide transmission tariff for tight pools.” In contrast, Ken asserted that “the establishment of the California ISO and the ERCOT ISO was the direct result of mandates by state governments.” The Midwest ISO is unique; it was neither required by government nor based on an existing institution. Apparently, “two states in the region required utilities in their states to participate in either a Commission-approved ISO (which occurred in Illinois and Wisconsin), or sell their transmission assets to an independent transmission company that would operate under a regional ISO (applied in Wisconsin).”

Apart from the RTOs, the ISOs are those in Texas, California, Alberta, Ontario and New York (NYISO). The western half of the United States is covered by the Western Electricity Coordinating Council (WECC). California has an ISO; however, SMUD, TID and LADWP are independent utilities operating as separate balancing authorities within California, outside the California ISO. CAISO and AESO are the only ISOs in the western market. The rest of WECC is divided into “balancing authorities,” each of which controls what flows into and out of its area; in this region, the BPA plays that role. Balancing authorities are responsible for balancing schedules, managing transmission, and keeping the system frequency at 60 Hz.
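How a balancing authority tracks whether it is "balanced" can be sketched with the area control error (ACE). This uses the simplified tie-line bias form ACE = (NIa − NIs) − 10B(Fa − Fs), omitting metering-error and other correction terms; B is the frequency bias in MW per 0.1 Hz and is negative by convention. The example area and numbers are hypothetical.

```python
# Sketch of the area control error (ACE) a balancing authority tracks, in the
# simplified form ACE = (NIa - NIs) - 10*B*(Fa - Fs). Metering-error and other
# correction terms are omitted. B is in MW per 0.1 Hz and negative by convention.

SCHEDULED_FREQUENCY_HZ = 60.0


def area_control_error(ni_actual_mw: float, ni_scheduled_mw: float,
                       freq_actual_hz: float, bias_mw_per_0_1hz: float,
                       freq_scheduled_hz: float = SCHEDULED_FREQUENCY_HZ) -> float:
    """Negative ACE: the area is short of generation. Positive: surplus."""
    interchange_error = ni_actual_mw - ni_scheduled_mw
    frequency_term = 10 * bias_mw_per_0_1hz * (freq_actual_hz - freq_scheduled_hz)
    return interchange_error - frequency_term


# Hypothetical area: exports on schedule while frequency sags to 59.95 Hz
print(round(area_control_error(500, 500, 59.95, -50), 1))
# Hypothetical area: exporting 50 MW more than scheduled at 60 Hz
print(area_control_error(550, 500, 60.0, -50))
```

In the first case the area is not contributing its share of support to the sagging frequency (ACE is about -25 MW), so it should raise generation; in the second it is over-generating by 50 MW.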

PJM is the darling of the Smart Grid promoters. It is an RTO (facilitating transmission and markets across the several states in which it operates).

For energy traders operating in the western market there are several distinct locations upon which the prices are predicated. These include:


- COB (California-Oregon Border)

- Mid-Columbia (Mid-C)

- Four Corners

- Palo Verde

- South of Path 15 (SP15)

- North of Path 15 (NP15)

Energy prices are based on Platts month-ahead pricing, divided into “On Peak” (6am to 10pm) and “Off Peak” (10pm to 6am). Trading is usually done in blocks of 25 megawatts.

The energy market handles financial (futures) contracts defined by a specific delivery location, delivery time and quantity. These trades are cleared through an exchange or clearinghouse.

An example would be: “Mid-C, off peak, 25 MW block, March delivery”.

Physical contracts can be structured the same way as financial contracts, but bilateral trades permit more flexibility in the terms (financial contracts are mainly speculative).
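A block contract's delivered energy follows from its block size and the number of peak or off-peak hours in the delivery month. This sketch uses the simplified definitions given in class (16 on-peak hours and 8 off-peak hours every day); standard WECC on-peak products also exclude Sundays and NERC holidays, which is ignored here. The `BlockContract` class is a hypothetical illustration, not an exchange's contract specification.

```python
from calendar import monthrange
from dataclasses import dataclass

# Peak definitions as described in class. Standard WECC on-peak products
# also exclude Sundays and NERC holidays; this sketch ignores that.
HOURS_PER_DAY = {"on_peak": 16,   # 6am to 10pm
                 "off_peak": 8}   # 10pm to 6am


@dataclass(frozen=True)
class BlockContract:
    """Hypothetical representation of a monthly power block."""
    hub: str         # delivery location, e.g. "Mid-C"
    period: str      # "on_peak" or "off_peak"
    block_mw: float  # block size in MW (typically 25)
    year: int
    month: int

    def __post_init__(self) -> None:
        if self.period not in HOURS_PER_DAY:
            raise ValueError(f"unknown period: {self.period!r}")

    def hours(self) -> int:
        days_in_month = monthrange(self.year, self.month)[1]
        return days_in_month * HOURS_PER_DAY[self.period]

    def energy_mwh(self) -> float:
        return self.block_mw * self.hours()


contract = BlockContract("Mid-C", "off_peak", 25, 2011, 3)
print(contract.hours(), contract.energy_mwh())  # → 248 6200
```

Under these simplified definitions, a 25 MW off-peak block for March 2011 delivers 6,200 MWh.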

The proposed WECC Energy Imbalance Market (EIM):

The proposed EIM is a sub-hourly, real-time energy market providing centralized, automated generation dispatch over a wide area. However, unlike an RTO, it would not replace the current bilateral energy market, but would instead supplement the bilateral market with real-time balancing. The automation of the EIM would allow for a more efficient dispatch of the system by providing access to balancing services from generation resources located throughout the EIM footprint and optimizing the overall dispatch, while incorporating real-time generation capabilities, transmission constraints, and pricing.

While the EIM market design has many similarities to those administered by ISOs and RTOs, this proposal does not include implementing an RTO in the Western Interconnection. The EIM could utilize tools and algorithms that have been successfully implemented in other centralized markets, but an EIM would not include a consolidated regional tariff for basic transmission service (e.g. network or point-to-point). But the EIM would use a coordination tariff to address provision of generation and load energy imbalance, replacing some Ancillary Service Schedules of participating transmission provider tariffs.
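The core idea of centralized dispatch can be sketched as a merit-order stack: meet an imbalance by calling on the cheapest offers first across the whole footprint. This is a deliberately simplified illustration; the actual EIM optimization also enforces transmission constraints and generator limits over time, which are omitted here, and the resources and prices are hypothetical.

```python
from typing import List, Tuple

# Highly simplified merit-order dispatch: cover an imbalance with the
# cheapest offers first. Transmission constraints and ramp limits are
# omitted. Resource names and prices are hypothetical.

Offer = Tuple[str, float, float]  # (resource, available_mw, price_per_mwh)


def merit_order_dispatch(offers: List[Offer],
                         imbalance_mw: float) -> Tuple[List[Tuple[str, float]], float]:
    """Return the dispatch list and the marginal (clearing) price."""
    remaining = imbalance_mw
    dispatch: List[Tuple[str, float]] = []
    clearing_price = 0.0
    for name, mw, price in sorted(offers, key=lambda o: o[2]):
        if remaining <= 0:
            break
        take = min(mw, remaining)
        dispatch.append((name, take))
        clearing_price = price  # price of the last (most expensive) unit used
        remaining -= take
    if remaining > 0:
        raise ValueError("not enough offers to cover the imbalance")
    return dispatch, clearing_price


offers = [("CT C", 150, 80.0), ("Hydro A", 100, 20.0), ("CCCT B", 200, 35.0)]
result = merit_order_dispatch(offers, 250)
print(result)  # → ([('Hydro A', 100), ('CCCT B', 150)], 35.0)
```

Pooling offers across a wide footprint is what lets the cheapest resources anywhere in the EIM cover a local imbalance, instead of each balancing authority relying only on its own units.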
